Testing OpenAPI specification compliance in Quarkus with Prism

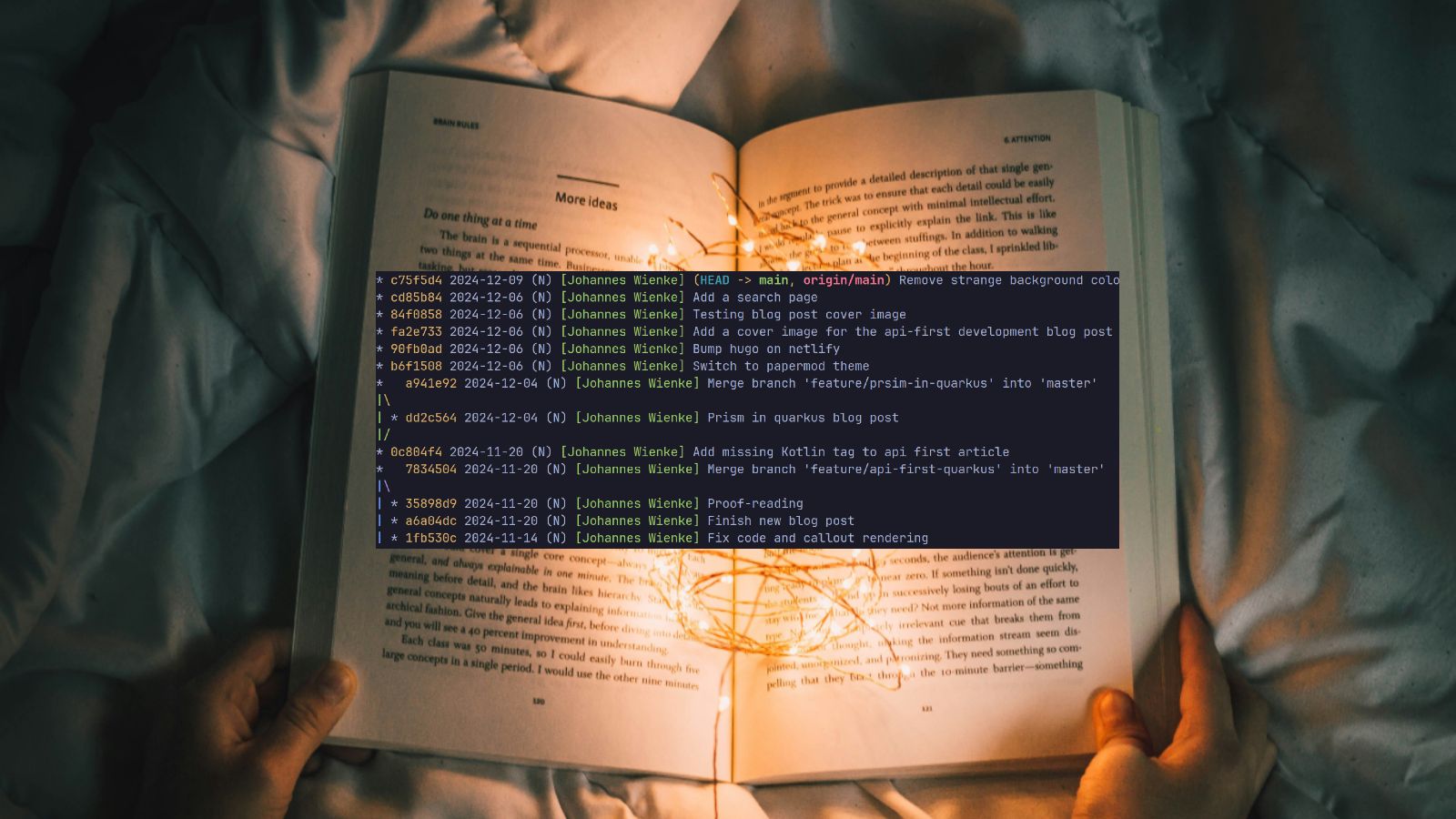

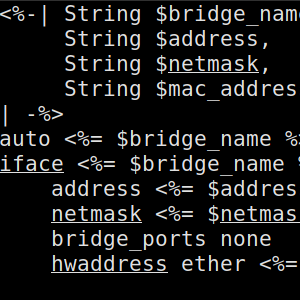

Quarkus is a good choice for developing RESTful APIs in an API-first manner, using the OpenAPI Generator to generate models and API interfaces. That way, the compiler already enforces large parts of compliance with the API specification. However, not every inconsistency can be detected this way. In this post, I demonstrate how to integrate Stoplight’s Prism proxy into the test infrastructure of a Quarkus Kotlin project to validate OpenAPI specification compliance automatically as part of the API tests.

Table of contents

- Motivation
- Validating OpenAPI compliance with Prism
- Integrating Prism into Quarkus
- Implementing a Quarkus test resource
- Redirecting test requests through the test resource
- Detecting and fixing a bug in the example project
- Using the test resource in integration tests
- Trading confidence for test execution time
- Summary

Motivation

In *API-first development with Quarkus and Kotlin*, I showed a basic Quarkus setup that supports API-first development. As a short recap, API-first development is an approach to developing (RESTful) APIs in which a formal specification of the intended API (or API changes) is created before the API provider or its consumers are implemented. That way, we can use the specification for code generation, parallel development, and verification.

A common issue when consuming APIs based on their documentation is that the actual implementation differs from what is documented. The setup shown before prevents a large part of this problem by leveraging code generation through the OpenAPI Generator. Moreover, the compiler catches some errors, such as generated interfaces that are not implemented properly. However, not every aspect of API compliance is validated this way, so we can still provide an implementation that deviates from the specification.
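The following minimal sketch illustrates what the compiler does and does not enforce. `Pet`, `PetsApi`, and `PetsResource` are hypothetical, hand-written stand-ins that only mimic the rough shape of generated code. The actual OpenAPI Generator output, including package names, JAX-RS annotations, and nullability, will look different.

```kotlin
// Hypothetical stand-in for a generated model class.
data class Pet(val id: Long, val name: String)

// Hypothetical stand-in for a generated API interface: one function per operation.
interface PetsApi {
    fun listPets(): List<Pet>
}

// The compiler enforces the interface contract: removing this override,
// or changing its return type to, say, List<String>, is a compile error.
class PetsResource : PetsApi {
    override fun listPets(): List<Pet> = listOf(Pet(id = 1, name = "Tom"))
}

fun main() {
    // What the compiler does NOT check: the status code, headers, and
    // JSON body that the framework eventually sends over the wire.
    println(PetsResource().listPets()) // [Pet(id=1, name=Tom)]
}
```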
Here’s a short example demonstrating one of the more obvious ways we can still deviate:

```yaml
# ...
paths:
  /pets:
    get:
      summary: List all pets
      operationId: listPets
      responses:
        '200':
          description: An array of pets
          content:
            application/json:
              schema:
                $ref: "#/components/schemas/Pets"
# ...
components:
  schemas:
    PetId:
      type: integer
      format: int64
    Pet:
      type: object
      required:
        - id
        - name
      properties:
        id:
          $ref: "#/components/schemas/PetId"
        name:
          type: string
    Pets:
      type: array
      items:
        $ref: "#/components/schemas/Pet"
```

...
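This minimal Kotlin sketch shows what validating a response body against the `Pets` schema above involves. It does not reflect how Prism works internally. `validatePets` is a hypothetical helper that hard-codes the schema's rules. It assumes the JSON body has already been decoded into plain Kotlin values: lists for arrays, maps for objects, `Int`/`Long` for integers, and `String` for strings.

```kotlin
// Hypothetical helper: checks a decoded JSON value against the `Pets` schema.
// Returns a list of violations; an empty list means the body is compliant.
fun validatePets(body: Any?): List<String> {
    // Pets: type array
    if (body !is List<*>) return listOf("\$: expected array")
    val errors = mutableListOf<String>()
    for ((i, item) in body.withIndex()) {
        val path = "\$[$i]"
        // Pet: type object
        if (item !is Map<*, *>) {
            errors += "$path: expected object"
            continue
        }
        @Suppress("UNCHECKED_CAST")
        val pet = item as Map<String, Any?>
        // id: required, PetId = integer (int64); JSON null is not allowed
        val id = pet["id"]
        when {
            "id" !in pet -> errors += "$path: missing required property 'id'"
            id !is Int && id !is Long -> errors += "$path.id: expected integer (int64)"
        }
        // name: required string
        when {
            "name" !in pet -> errors += "$path: missing required property 'name'"
            pet["name"] !is String -> errors += "$path.name: expected string"
        }
    }
    return errors
}

fun main() {
    val valid = listOf(mapOf("id" to 1L, "name" to "Tom"))
    val invalid = listOf(mapOf("id" to "1")) // id has the wrong type, name is missing
    println(validatePets(valid))   // []
    println(validatePets(invalid)) // [$[0].id: expected integer (int64), $[0]: missing required property 'name']
}
```

Hand-written checks like this have to be kept in sync with the specification manually. A validating proxy such as Prism derives the rules from the specification itself and applies them to the requests and responses passing through it.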